Hi,

I would like to achieve the following setup with one-deploy. In hybrid multicloud environments, where VMs can have different disk performance requirements, we usually create separate NFS volumes, for example:

- `1.1.1.1:/datastore1/nfs`: backed by SSD disks
- `2.2.2.2:/datastore2/nfs`: backed by SATA disks

The main goal is to create two different datastores in which to store the VM disks.

As far as I can tell, this has to be done with the generic datastore mode.

I've tried something like this with one-deploy (only the datastore-related part of the inventory is shown):

```yaml
ds:
  mode: generic
  config:
    IMAGE_DS:
      default:
        enabled: false
      datastore1:
        id: 100
        managed: true
        enabled: true
        symlink:
          groups: [frontend, node]
          src: /mnt/datastore1/100/
        template:
          TYPE: IMAGE_DS
          TM_MAD: shared
      datastore2:
        id: 101
        managed: true
        enabled: true
        symlink:
          groups: [frontend, node]
          src: /mnt/datastore2/101/
        template:
          TYPE: IMAGE_DS
          TM_MAD: shared
    FILE_DS:
      files:
        id: 2
        managed: true
        symlink:
          groups: [node]
          src: /mnt/datastore1/2/
        template:
          TYPE: FILE_DS
          TM_MAD: shared

fstab:
  - src: "1.1.1.1:/datastore1/nfs"
    path: /mnt/datastore1
    fstype: nfs
    opts: rw,nfsvers=3
  - src: "2.2.2.2:/datastore2/nfs"
    path: /mnt/datastore2
    fstype: nfs
    opts: rw,nfsvers=3
```

However, the playbook fails during the Provision Datastores task:

```
TASK [opennebula.deploy.datastore/generic : Ensure /var/lib/one/datastores/ exists] ************************
ok: [n1] => changed=false
  gid: 9869
  group: oneadmin
  mode: '0750'
  owner: oneadmin
  path: /var/lib/one/datastores/
  size: 4096
  state: directory
  uid: 9869
ok: [n2] => changed=false
  gid: 9869
  group: oneadmin
  mode: '0750'
  owner: oneadmin
  path: /var/lib/one/datastores/
  size: 4096
  state: directory
  uid: 9869

TASK [opennebula.deploy.datastore/generic : Setup datastore symlinks] **************************************
skipping: [n1] => (item=system) => changed=false
  ansible_loop_var: item
  false_condition: _mount_path != '/var/lib/one/datastores'
  item: system
  skip_reason: Conditional result was False
skipping: [n1] => (item=default) => changed=false
  ansible_loop_var: item
  false_condition: _mount_path != '/var/lib/one/datastores'
  item: default
  skip_reason: Conditional result was False
skipping: [n2] => (item=system) => changed=false
  ansible_loop_var: item
  false_condition: _mount_path != '/var/lib/one/datastores'
  item: system
  skip_reason: Conditional result was False
skipping: [n2] => (item=default) => changed=false
  ansible_loop_var: item
  false_condition: _mount_path != '/var/lib/one/datastores'
  item: default
  skip_reason: Conditional result was False
failed: [n1] (item=datastore1) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/100' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore1/100' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/100' ]] && ! rmdir '/var/lib/one/datastores/100'; then exit 1; fi
    if ! ln -s '/mnt/datastore1/100' '/var/lib/one/datastores/100'; then exit 1; fi
    exit 78
  delta: '0:00:00.003682'
  end: '2025-07-16 16:23:47.857337'
  failed_when_result: true
  item: datastore1
  msg: non-zero return code
  rc: 1
  start: '2025-07-16 16:23:47.853655'
  stderr: Symlink target does not exist or is not a directory.
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
failed: [n2] (item=datastore1) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/100' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore1/100' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/100' ]] && ! rmdir '/var/lib/one/datastores/100'; then exit 1; fi
    if ! ln -s '/mnt/datastore1/100' '/var/lib/one/datastores/100'; then exit 1; fi
    exit 78
  delta: '0:00:00.004643'
  end: '2025-07-16 16:23:47.916261'
  failed_when_result: true
  item: datastore1
  msg: non-zero return code
  rc: 1
  start: '2025-07-16 16:23:47.911618'
  stderr: Symlink target does not exist or is not a directory.
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
failed: [n1] (item=datastore2) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/101' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore2/101' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/101' ]] && ! rmdir '/var/lib/one/datastores/101'; then exit 1; fi
    if ! ln -s '/mnt/datastore2/101' '/var/lib/one/datastores/101'; then exit 1; fi
    exit 78
  delta: '0:00:00.003850'
  end: '2025-07-16 16:23:48.046909'
  failed_when_result: true
  item: datastore2
  msg: non-zero return code
  rc: 1
  start: '2025-07-16 16:23:48.043059'
  stderr: Symlink target does not exist or is not a directory.
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
failed: [n2] (item=datastore2) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/101' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore2/101' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/101' ]] && ! rmdir '/var/lib/one/datastores/101'; then exit 1; fi
    if ! ln -s '/mnt/datastore2/101' '/var/lib/one/datastores/101'; then exit 1; fi
    exit 78
  delta: '0:00:00.004549'
  end: '2025-07-16 16:23:48.154299'
  failed_when_result: true
  item: datastore2
  msg: non-zero return code
  rc: 1
  start: '2025-07-16 16:23:48.149750'
  stderr: Symlink target does not exist or is not a directory.
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
ok: [n1] => (item=files) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/2' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore1/2' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/2' ]] && ! rmdir '/var/lib/one/datastores/2'; then exit 1; fi
    if ! ln -s '/mnt/datastore1/2' '/var/lib/one/datastores/2'; then exit 1; fi
    exit 78
  delta: '0:00:00.003522'
  end: '2025-07-16 16:23:48.245525'
  failed_when_result: false
  item: files
  msg: ''
  rc: 0
  start: '2025-07-16 16:23:48.242003'
  stderr: ''
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
ok: [n2] => (item=files) => changed=false
  ansible_loop_var: item
  cmd: |-
    set -o errexit
    if [[ -L '/var/lib/one/datastores/2' ]]; then exit 0; fi
    if ! [[ -d '/mnt/datastore1/2' ]]; then
      echo "Symlink target does not exist or is not a directory." >&2
      exit 1
    fi
    if [[ -d '/var/lib/one/datastores/2' ]] && ! rmdir '/var/lib/one/datastores/2'; then exit 1; fi
    if ! ln -s '/mnt/datastore1/2' '/var/lib/one/datastores/2'; then exit 1; fi
    exit 78
  delta: '0:00:00.004123'
  end: '2025-07-16 16:23:48.384477'
  failed_when_result: false
  item: files
  msg: ''
  rc: 0
  start: '2025-07-16 16:23:48.380354'
  stderr: ''
  stderr_lines: <omitted>
  stdout: ''
  stdout_lines: <omitted>
```
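For reference, the `cmd` that fails above can be read as the bash function below. It is a minimal sketch that only restates the logged script so it can be run by hand on a node; the function name `link_datastore` and its two arguments are hypothetical and not part of one-deploy. Returning 0 means the link already existed, 1 means the target directory was missing (what happens for IDs 100 and 101), and 78 means the link was just created; treating 78 as "changed" is my reading of the script, not something the log states.

```bash
#!/usr/bin/env bash
# Hypothetical helper restating the "Setup datastore symlinks" cmd from the log.
# Usage: link_datastore <target_dir_on_mount> <link_path_under_datastores>
link_datastore() {
  local src="$1" dst="$2"
  # Already a symlink: nothing to do (same as "exit 0" in the log).
  if [[ -L "$dst" ]]; then return 0; fi
  # The target on the NFS mount must already exist as a directory.
  if ! [[ -d "$src" ]]; then
    echo "Symlink target does not exist or is not a directory." >&2
    return 1
  fi
  # An existing local directory is replaced only if it is empty (rmdir).
  if [[ -d "$dst" ]] && ! rmdir "$dst"; then return 1; fi
  if ! ln -s "$src" "$dst"; then return 1; fi
  # Link created; the original script signals this with exit code 78.
  return 78
}
```

For example, running `link_datastore /mnt/datastore1/100 /var/lib/one/datastores/100` on n1 with the current mounts should return 1 with the same message as the log, since `/mnt/datastore1/100` is not there.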

On the host nodes, both NFS shares are mounted correctly:

```
1.1.1.1:/datastore1/nfs  187G  0  187G  0%  /mnt/datastore1
2.2.2.2:/datastore2/nfs  187G  0  187G  0%  /mnt/datastore2
```

However, the `/var/lib/one/datastores/` folder only contains this:

```
root@host1:~# ls -lh /var/lib/one/datastores/
total 0
lrwxrwxrwx 1 root root 33 Jul 16 15:42 2 -> /mnt/datastore1/2
```
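The only link present is `2 -> /mnt/datastore1/2`; for IDs 100 and 101 the log shows the task stopping at the `-d` check, because `/mnt/datastore1/100` and `/mnt/datastore2/101` do not exist on the mounts. One thing that might be worth trying (untested, and an assumption about what the generic mode expects) is creating those directories on the shares before re-running the playbook. In the sketch below, the function name `precreate_ds_dirs` is hypothetical, and the owner `oneadmin:oneadmin` and mode `0750` are copied from the `/var/lib/one/datastores/` task output rather than from one-deploy documentation.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create the per-datastore directories that the
# symlink task expects to find on the NFS mounts.
# Usage: precreate_ds_dirs <mount_point> <datastore_id>...
precreate_ds_dirs() {
  local mount="$1"; shift
  local ds_id dir
  for ds_id in "$@"; do
    dir="${mount%/}/${ds_id}"
    mkdir -p "$dir" || return 1
    # Mode copied from the log output for /var/lib/one/datastores/ (assumption).
    chmod 0750 "$dir" || return 1
    # Ownership copied from the log (oneadmin:oneadmin); only applied
    # when that user exists on this machine.
    if id oneadmin >/dev/null 2>&1; then
      chown oneadmin:oneadmin "$dir" || return 1
    fi
  done
}

# Example for the layout in this post (run once, on a host that mounts both shares):
# precreate_ds_dirs /mnt/datastore1 100
# precreate_ds_dirs /mnt/datastore2 101
```

On exports with `root_squash` (the Linux NFS server default), root on the client is mapped to an anonymous user, so the `chown` may be refused; in that case ownership would have to be set on the NFS server side.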

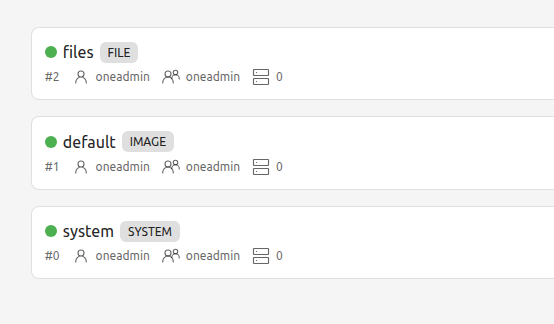

And if you see in the OpenNebula UI you can see just the three default:

How can we have multiple NFS datastores and multiple image datastores?