Hi,

I’ve been dealing with a very strange connectivity issue:

In OpenNebula I created a virtual network for VLAN 30 (VN_MAD 802.1Q, PHYDEV enp5s0f1) in Ethernet mode, because DHCP is provided by the router.
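
For reference, here is a minimal sketch of what such a network template looks like. It is generated from Python only so it can be checked. It assumes the standard OpenNebula virtual network attributes (`VN_MAD`, `PHYDEV`, `VLAN_ID`, and an `AR` of `TYPE = "ETHER"` for "Ethernet mode"). The helper name `vlan_network_template`, the network name `vlan30` and the range `SIZE` are hypothetical values chosen for illustration, not my actual configuration.

```python
# Hypothetical helper: renders an OpenNebula virtual network template like the
# one described above (802.1Q, VLAN 30 on enp5s0f1, Ethernet-only address range).
def vlan_network_template(name: str, phydev: str, vlan_id: int, size: int) -> str:
    """Return a VNET template string; name and size are illustrative assumptions."""
    lines = [
        f'NAME    = "{name}"',
        'VN_MAD  = "802.1Q"',
        f'PHYDEV  = "{phydev}"',
        f'VLAN_ID = "{vlan_id}"',
        # TYPE = ETHER: OpenNebula only allocates MAC addresses from this range;
        # no IP is allocated by OpenNebula, the external DHCP server assigns it.
        f'AR = [ TYPE = "ETHER", SIZE = "{size}" ]',
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(vlan_network_template("vlan30", "enp5s0f1", 30, 100))
```

The printed text could be saved to a file and registered with `onevnet create <file>`.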

I’ve attached this network to a Windows Server VM in OpenNebula, and everything works fine (connectivity is OK, DHCP works, etc.).

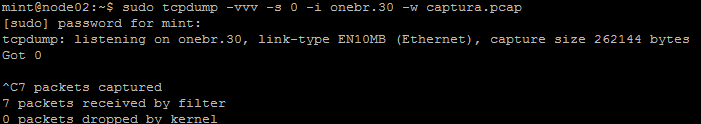

Then I created some Ubuntu VMs and attached them to the same network. They seem to work fine and even get an IP address from my external DHCP server, but I can’t ping or SSH to them. I’m attaching a screenshot showing that the VM’s interface receives traffic (RX) but transmits nothing (TX).
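
To test SSH reachability from another machine without relying on ICMP (which needs raw sockets), a plain TCP connect to port 22 is enough. This is a minimal sketch using only the standard library. The function name `tcp_port_open` and the example address `192.0.2.10` (a documentation-only range) are hypothetical.

```python
import socket


def tcp_port_open(host: str, port: int = 22, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts (socket.timeout is an OSError
        # subclass) and unreachable hosts or networks.
        return False


if __name__ == "__main__":
    # Hypothetical VM address; replace with the IP the VM got from DHCP.
    print(tcp_port_open("192.0.2.10", 22, timeout=2.0))
```

A `False` here for the Ubuntu VMs and `True` for the Windows VM (on its own service port) would match the symptom described.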

Of course, the Security Group is the same for all VMs (and they are all on the same network). The VLAN subinterface on the host is also correct:

    enp5s0f1.30 Link encap:Ethernet  HWaddr 18:a9:05:41:ca:4f
              inet6 addr: fe80::1aa9:5ff:fe41:ca4f/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:28432 errors:0 dropped:0 overruns:0 frame:0
              TX packets:25263 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:37414700 (37.4 MB)  TX bytes:3566314 (3.5 MB)
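
The host subinterface counters show traffic in both directions, so the "RX but no TX" pattern is on the VM side. This minimal sketch parses net-tools style `ifconfig` output (the format shown above) and flags an interface that receives packets but never sends any. The function names are hypothetical. On a Linux VM the same counters could also be read from `/sys/class/net/<iface>/statistics/rx_packets` and `tx_packets`.

```python
import re

# Matches net-tools style counters such as "RX packets:28432" / "TX packets:25263".
_COUNTER = re.compile(r"\b(RX|TX) packets:(\d+)")


def packet_counters(ifconfig_text: str) -> dict:
    """Extract RX/TX packet counts from old-style ifconfig output."""
    return {kind: int(n) for kind, n in _COUNTER.findall(ifconfig_text)}


def receives_but_never_sends(ifconfig_text: str) -> bool:
    """True for the symptom described: packets arrive but none leave."""
    c = packet_counters(ifconfig_text)
    return c.get("RX", 0) > 0 and c.get("TX", 0) == 0


if __name__ == "__main__":
    host_output = """
    RX packets:28432 errors:0 dropped:0 overruns:0 frame:0
    TX packets:25263 errors:0 dropped:0 overruns:0 carrier:0
    """
    print(packet_counters(host_output), receives_but_never_sends(host_output))
```

Run against the host output above, it reports both counters and `False`, i.e. the host subinterface itself is transmitting.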

- Is it normal that OpenNebula can’t “see” the IP address the VM obtained via DHCP? I think this is the expected behaviour, but I’m not sure.
- Why does this happen only with the Ubuntu VMs and not with the Windows Server VM?